T-5: Difference between revisions

No edit summary |

|||

| (21 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

<strong>Goal: </strong> | <strong>Goal: </strong> | ||

In this set of problems, we characterize the energy landscape of the spherical <math>p</math>-spin by studying its metastable states (local minima). | |||

<br> | <br> | ||

<strong>Techniques: </strong> | <strong>Techniques: </strong> Kac-Rice formula, conditional probabilities. | ||

<br> | <br> | ||

== Dynamics, optimization, trapping local minima == | == Dynamics, optimization, trapping local minima == | ||

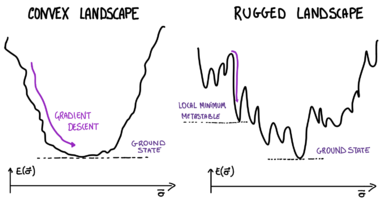

[[File:Landscapes-GDD.png|thumb|right|x200px| | [[File:Landscapes-GDD.png|thumb|right|x200px|Non-rugged vs rugged energy landscapes.]] | ||

So far we have discussed the equilibrium properties of disordered systems, that are encoded in their partition function/free energy. When a system (following Langevin, Monte Carlo dynamics) equilibrates at sufficiently large times, its long-time properties are captured by these equilibrium calculations. In glassy systems the equilibration timescales are extremely large: for very large timescales the system does not visit equilibrium configurations, but rather metastable states. | |||

<ul> | <ul> | ||

<li> '''Rugged landscapes.''' Consider the spherical <math>p</math>-spin model | <li> '''Rugged landscapes.''' Consider the spherical <math>p</math>-spin model: <math>E(\vec{\sigma})</math> is an <ins> energy landscape </ins>. It is a random function on configuration space (the surface <math> \mathcal{S}_N </math> of the sphere). This landscape has its global minimum(a) at the ground state configuration(s): the energy density of the ground state(s) can be obtained studying the partition function <math> Z </math> in the limit <math> \beta \to \infty </math>. Besides the ground state(s), the energy landscape can have other local minima; fully-connected models of glasses are characterized by the fact that there are plenty of these local minima: the energy landscape is <ins> rugged</ins>, see the sketch. | ||

</li> | </li> | ||

<br> | <br> | ||

| Line 38: | Line 40: | ||

== Problems == | == Problems == | ||

In | In this and in the following problem, we discuss the computation of the annealed complexity of the spherical <math>p</math>-spin model, which is defined by | ||

<center> <math> | <center> <math> | ||

\Sigma_{\text{a}}(\epsilon)= \lim_{N \to \infty}\frac{1}{N}\log \overline{\mathcal{N}(\epsilon)} , \quad \quad \mathcal{N}(\epsilon)= \left\{ \text{number stat. points of energy density } \epsilon\right\} | \Sigma_{\text{a}}(\epsilon)= \lim_{N \to \infty}\frac{1}{N}\log \overline{\mathcal{N}(\epsilon)} , \quad \quad \mathcal{N}(\epsilon)= \left\{ \text{number stat. points of energy density } \epsilon\right\} | ||

</math> </center> | </math> </center> | ||

=== Problem 5 | |||

=== Problem 5: the Kac-Rice formula and the complexity === | |||

| Line 78: | Line 81: | ||

<ol start="3"> | <ol start="3"> | ||

<li><em> Gaussianity and correlations.</em> Determine the distribution of the quantity <math> E (\vec{1})</math>. The entries of <math>\nabla_\perp E (\vec{1}), \nabla^2_\perp E (\vec{1})</math> are Gaussian variables. One can show that the components of <math> \nabla_\perp E (\vec{1})</math> are uncorrelated to <math> E (\vec{1}), \nabla^2_\perp E (\vec{1})</math> | <li><em> Gaussianity and correlations.</em> | ||

<ul> | |||

<li> Determine the distribution of the quantity <math> E (\vec{1})</math>. </li> | |||

<li> The entries of <math>\nabla_\perp E (\vec{1}), \nabla^2_\perp E (\vec{1})</math> are Gaussian variables. One can show that the <math> N-1 </math> components of <math> \nabla_\perp E (\vec{1})</math> are uncorrelated to <math> E (\vec{1}), \nabla^2_\perp E (\vec{1})</math>; they have zero mean and covariances | |||

<math> | <math> | ||

\overline{(\nabla_\perp E)_\alpha \, (\nabla_\perp E)_\beta}= | \overline{(\nabla_\perp E)_\alpha \, (\nabla_\perp E)_\beta}= p \, \delta_{\alpha \beta}+O\left(\frac{1}{N} \right). | ||

</math> | </math> | ||

</ | Compute the probability density that <math> \nabla_\perp E (\vec{1})=0</math>. </li> | ||

<li> The <math>(N-1)\times (N-1) </math> matrix <math> \nabla_\perp^2 E (\vec{\sigma}) </math> conditioned to the fact that <math> E(\vec 1)=N \epsilon </math> can be written as | |||

<center> | <center> | ||

<math> | <math> | ||

[\nabla_\perp^2 E(\vec{1}) | [\nabla_\perp^2 E(\vec{1})]_{\alpha \beta}= M_{\alpha \beta}- p \epsilon\, \delta_{\alpha \beta}, | ||

</math> | </math> | ||

</center> | </center> | ||

where the matrix <math> M </math> has random entries with zero average and correlations | where the matrix <math> M </math> has random entries with zero average and correlations | ||

<math> | <math> | ||

\overline{{M}_{\alpha \beta} \, {M}_{\gamma \delta}}= \frac{p (p-1)}{ | \overline{{M}_{\alpha \beta} \, {M}_{\gamma \delta}}= \frac{p (p-1)}{ N} \left( \delta_{\alpha \gamma} \delta_{\beta \delta}+ \delta_{\alpha \delta} \delta_{\beta \gamma}\right) | ||

</math> | </math> | ||

Combining this with the results above, show that | |||

Combining | |||

<center> | <center> | ||

<math> | <math> | ||

\overline{\mathcal{N}(\epsilon)}= (2 \pi e)^{\frac{N}{2}} \,\frac{1}{(\pi \, p)^{\frac{N-1}{2}}}\; \sqrt{\frac{N}{\pi}} e^{-N \epsilon^2}\;\overline{|\text{det} \left( M- p \epsilon \mathbb{I} \right)|} | \overline{\mathcal{N}(\epsilon)}= (2 \pi e)^{\frac{N}{2}} \,\frac{1}{(2 \pi \, p)^{\frac{N-1}{2}}}\; \sqrt{\frac{N}{2 \pi}} e^{-\frac{N \epsilon^2}{2}}\;\overline{|\text{det} \left( M- p \epsilon \mathbb{I} \right)|} | ||

</math> | </math> | ||

</center> | </center> | ||

</ul> | |||

</li> | </li> | ||

</ol> | </ol> | ||

<br> | <br> | ||

| Line 181: | Line 114: | ||

== Check out: key concepts == | == Check out: key concepts == | ||

Gradient descent, rugged landscapes, metastable states | Gradient descent, rugged landscapes, metastable states, landscape’s complexity. | ||

Latest revision as of 10:00, 12 March 2025

Goal:

In this set of problems, we characterize the energy landscape of the spherical -spin by studying its metastable states (local minima).

Techniques: Kac-Rice formula, conditional probabilities.

Dynamics, optimization, trapping local minima

So far we have discussed the equilibrium properties of disordered systems, that are encoded in their partition function/free energy. When a system (following Langevin, Monte Carlo dynamics) equilibrates at sufficiently large times, its long-time properties are captured by these equilibrium calculations. In glassy systems the equilibration timescales are extremely large: for very large timescales the system does not visit equilibrium configurations, but rather metastable states.

- Rugged landscapes. Consider the spherical -spin model: is an energy landscape . It is a random function on configuration space (the surface of the sphere). This landscape has its global minimum(a) at the ground state configuration(s): the energy density of the ground state(s) can be obtained studying the partition function in the limit . Besides the ground state(s), the energy landscape can have other local minima; fully-connected models of glasses are characterized by the fact that there are plenty of these local minima: the energy landscape is rugged, see the sketch.

- Optimization by gradient descent. Suppose that we are interested in finding the configurations of minimal energy, starting from an arbitrary configuration : we can implement a dynamics in which we progressively update the configuration moving towards lower and lower values of the energy, hoping to eventually converge to the ground state(s). The simplest dynamics of this sort is gradient descent,

where is the gradient of the landscape restricted to the sphere. The dynamics stops when it reaches a stationary point , a configuration where . If the landscape has a convex structure, this will be the ground state; if the energy landscape is very non-convex like in glasses, the end point of this algorithm will be a local minimum at energies much higher than the ground state (see sketch).

- Stationary points and complexity. To guess where gradient descent dynamics (or Langevin dynamics ) are expected to converge, it is useful to understand the distribution of the stationary points, i.e. the number of such configuration having a given energy density . In fully-connected models, this quantity has an exponential scaling, , where is the landscape’s complexity. [*] . Stationary points can be stable (local minima), or unstable (saddles or local maxima): their stability is encoded in the spectrum of the Hessian matrix : when all the eigenvalues of the Hessian are positive, the point is a local minimum (and a saddle otherwise).

- [*] - This quantity looks similar to the entropy we computed for the REM in Problem 1. However, while the entropy counts all configurations at a given energy density, the complexity accounts only for the stationary points.

Problems

In this and in the following problem, we discuss the computation of the annealed complexity of the spherical -spin model, which is defined by

Problem 5: the Kac-Rice formula and the complexity

- The Kac-Rice formula. Consider first a random function of one variable defined on an interval , and let be the number of points such that . Justify why

where is the probability density that is a zero of the function. In particular, why is the derivative of the function appearing in this formula? Consider now the number of stationary points of the -spin energy landscape, which satisfy . Justify why the generalization of the formula above gives

where is the probability density that is a stationary point of energy density , and is the Hessian matrix of the function restricted to the sphere.

- Statistical rotational invariance. Recall the expression of the correlations of the energy landscape of the -spin computed in Problem 3.1: in which sense the correlation function is rotationally invariant? Justify why rotational invariance implies that

where is one fixed vector belonging to the surface of the sphere. Where does the prefactor arise from?

- Gaussianity and correlations.

- Determine the distribution of the quantity .

- The entries of are Gaussian variables. One can show that the components of are uncorrelated to ; they have zero mean and covariances Compute the probability density that .

- The matrix conditioned to the fact that can be written as

where the matrix has random entries with zero average and correlations Combining this with the results above, show that

Check out: key concepts

Gradient descent, rugged landscapes, metastable states, landscape’s complexity.

![{\displaystyle [a,b]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/9c4b788fc5c637e26ee98b45f89a5c08c85f7935)

![{\displaystyle [\nabla _{\perp }^{2}E({\vec {1}})]_{\alpha \beta }=M_{\alpha \beta }-p\epsilon \,\delta _{\alpha \beta },}](https://wikimedia.org/api/rest_v1/media/math/render/svg/61b1aeacc7ce5d176046e30a2ee93dd5a674083d)