T-I

Revision as of 19:36, 8 January 2024

Goal: derive the equilibrium phase diagram of the simplest spin-glass model, the Random Energy Model (REM). The REM is defined by assigning to each configuration $\alpha$ of the system (for $N$ Ising spins there are $2^N$ of them) a random energy $E_\alpha$. The random energies are independent, taken from a Gaussian distribution

$$ p(E) = \frac{e^{-\frac{E^2}{N}}}{\sqrt{\pi N}} . $$
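A minimal Python sketch of this definition, assuming $N$ Ising spins and hence $2^N$ configurations; the helper name `sample_rem_energies` is hypothetical. Since $p(E) \propto e^{-E^2/N}$, each energy has zero mean and variance $N/2$. The sampled minimum energy density approaches $-\sqrt{\log 2}$ only slowly with $N$, so at small $N$ it typically sits somewhat above that value.

```python
import math
import random

def sample_rem_energies(n_spins, rng):
    """Draw the 2**n_spins independent REM energies E_alpha (hypothetical helper).

    p(E) = exp(-E^2 / N) / sqrt(pi N) is a Gaussian with zero mean and
    variance N / 2, i.e. standard deviation sqrt(N / 2).
    """
    sigma = math.sqrt(n_spins / 2.0)
    return [rng.gauss(0.0, sigma) for _ in range(2 ** n_spins)]

N_SPINS = 16
energies = sample_rem_energies(N_SPINS, random.Random(0))
eps_min = min(energies) / N_SPINS  # ground-state energy density of this sample
print(len(energies), eps_min, -math.sqrt(math.log(2)))
```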

Key concepts: average value vs typical value, large deviations, rare events, saddle point approximation, self-averaging quantities, freezing transition, .

Some probability notions relevant at large N

- Exponentially scaling variables. We will consider random variables $X_N$ which depend on a parameter $N \gg 1$ (the number of degrees of freedom: for a system of size $L$ in dimension $d$, $N = L^d$) and which scale as $X_N \sim O(e^{N})$; this means that the rescaled variable $Y_N = N^{-1}\log |X_N|$ has a well-defined distribution that remains of $O(1)$ when $N \to \infty$. A standard example is the partition function of a disordered system with $N$ degrees of freedom, $Z \sim e^{-\beta N f}$: here $X_N \to Z$ and $Y_N \to -\beta f$, where $f$ is the free energy density. Let $P_N(x)$ and $P_N(y)$ be the distributions of $X_N$ and $Y_N$.

- A random variable $Y_N$ is self-averaging when, in the limit $N \to \infty$, its distribution concentrates around its average, collapsing to a delta function:

$$ \lim_{N \to \infty} P_N(y) = \delta\Big(y - \lim_{N \to \infty} \langle Y_N \rangle\Big) . $$

This happens when its fluctuations are small compared to its average, meaning that

$$ \lim_{N \to \infty} \frac{\langle Y_N^2 \rangle - \langle Y_N \rangle^2}{\langle Y_N \rangle^2} = 0 . $$

When the random variable is not self-averaging, it remains distributed in the limit $N \to \infty$. The property of being self-averaging holds for the free energy density of the systems we will consider. It is a very important property: the free energy (and therefore all the thermodynamic observables, which can be obtained by taking derivatives of the free energy) does not fluctuate from sample to sample when $N$ is large. Notice that while quantities like $Y_N$ (such as the free energy density) are self-averaging, quantities scaling exponentially like $X_N$ (such as the partition function) are not necessarily so, see below.
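As a toy illustration (an assumption made only for this sketch, not part of the REM), take $X_N = e^{g_1 + \dots + g_N}$ with independent Gaussian $g_i$ of mean $\mu$ and variance $\sigma^2$. Then $Y_N = N^{-1}\log X_N$ is a sample mean, whose relative variance $\sigma^2/(N\mu^2)$ vanishes, while $X_N$ is log-normal with relative variance $e^{N\sigma^2} - 1$, which grows without bound. The function names are hypothetical.

```python
import math
import random
import statistics

def sample_y(n, mu, sigma, rng):
    """One sample of Y_N = N^-1 log X_N for the toy model X_N = exp(g_1 + ... + g_N)."""
    return sum(rng.gauss(mu, sigma) for _ in range(n)) / n

def relative_variance_y(n, samples=2000, mu=1.0, sigma=1.0, seed=0):
    """Monte Carlo estimate of Var(Y_N) / <Y_N>^2; expected to behave like sigma^2 / (N mu^2)."""
    rng = random.Random(seed)
    ys = [sample_y(n, mu, sigma, rng) for _ in range(samples)]
    return statistics.pvariance(ys) / statistics.fmean(ys) ** 2

def relative_variance_x_exact(n, sigma=1.0):
    """Exact Var(X_N) / <X_N>^2 = exp(N sigma^2) - 1 for the log-normal X_N."""
    return math.expm1(n * sigma ** 2)

for n in (10, 100, 400):
    print(n, relative_variance_y(n), relative_variance_x_exact(n))
```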

- For self-averaging quantities, the asymptotic value is their average value, which in general is also their typical value. In general, quantities like $Y_N$ have a distribution that for large $N$ takes the form $P_N(y) \sim e^{-N g(y)}$, where $g(y) \geq 0$ is some non-negative function with $\min_y g(y) = 0$. This is called a large deviation form for the probability distribution, with speed $N$. This distribution is of $O(1)$ at the value $y_{\rm typ}$ such that $g(y_{\rm typ}) = 0$: this value is the typical value of $Y_N$; all the other values of $y$ are associated with a probability that is exponentially small in $N$: they are exponentially rare.

Averages over distributions having a large deviation form can usually be computed with the saddle point approximation for large $N$. Let $h(y)$ be a function of $y$ which scales slower than exponentially in $N$; then

$$ \langle h(Y_N) \rangle = \int dy\, h(y)\, P_N(y) \approx \frac{\int dy\, h(y)\, e^{-N g(y)}}{\int dy\, e^{-N g(y)}} \xrightarrow{N \to \infty} h(y_{\rm typ}) , $$

because the integral is dominated by the region where $g(y) = 0$, all the other contributions being exponentially suppressed. This also implies (choosing $h(y) = y$)

$$ \lim_{N \to \infty} \langle Y_N \rangle = y_{\rm typ} . $$
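The saddle point statement can be checked numerically on a hypothetical example: $g(y) = (y-1)^2/2$, so that $y_{\rm typ} = 1$ and $P_N$ is Gaussian with variance $1/N$, and the smooth observable $h(y) = \sin y + y^2$. For this choice the exact average is $\sin(1)\, e^{-1/(2N)} + 1 + 1/N$, which tends to $h(1)$. The sketch uses a plain uniform-grid sum (a simplification chosen here, not a prescribed method); the unknown normalization of $P_N$ cancels in the ratio.

```python
import math

def large_deviation_average(h, g, n, lo=-5.0, hi=5.0, points=20001):
    """Approximate <h(Y_N)> when P_N(y) is proportional to exp(-N g(y)).

    A uniform-grid sum is used for both the numerator and the
    normalization, so the prefactor of P_N(y) cancels.
    """
    step = (hi - lo) / (points - 1)
    num = den = 0.0
    for k in range(points):
        y = lo + k * step
        weight = math.exp(-n * g(y))  # underflows harmlessly to 0.0 far from y_typ
        num += h(y) * weight
        den += weight
    return num / den

def rate(y):
    """Large deviation function g(y); it vanishes only at y_typ = 1."""
    return (y - 1.0) ** 2 / 2

def observable(y):
    """Smooth observable h(y) that does not grow exponentially with N."""
    return math.sin(y) + y ** 2

for n in (1, 10, 100, 1000):
    print(n, large_deviation_average(observable, rate, n), observable(1.0))
```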

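- Let us go back to $X_N$ (for simplicity take $X_N > 0$, as for a partition function): how do we get its typical value from $Y_N$? We have

$$ X_N^{\rm typ} \sim e^{N \langle Y_N \rangle} = e^{\langle \log X_N \rangle} , $$

since at large $N$ the average of $Y_N$ coincides with its typical value, and we have identified $Y_N = N^{-1}\log X_N$.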

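In the language of disordered systems, computing the typical value of $X_N$ through the average of its logarithm corresponds to performing a quenched average: from this average, one extracts the correct asymptotic value of the self-averaging quantity $Y_N$.

- The quenched average does not necessarily coincide with the annealed average, defined as:

$$ Y^{\rm ann} = \lim_{N \to \infty} \frac{1}{N} \log \langle X_N \rangle , $$

to be compared with the quenched one, $Y^{\rm q} = \lim_{N \to \infty} N^{-1} \langle \log X_N \rangle$. In fact, $\langle \log X_N \rangle \leq \log \langle X_N \rangle$ always holds because of the concavity of the logarithm (Jensen's inequality), so that $Y^{\rm q} \leq Y^{\rm ann}$. One case in which the inequality is strict, and thus quenched and annealed averages are not the same, is when $X_N$ is not self-averaging and its average value is much larger than its typical value at large $N$ (because the average is dominated by rare events). In this case $\langle X_N \rangle$ is exponentially larger than $X_N^{\rm typ}$, i.e. $Y^{\rm q} < Y^{\rm ann}$, and to get the correct limit of the self-averaging quantity $Y_N$ one has to perform the quenched average.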
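A Monte Carlo sketch of a strict inequality, on a toy model chosen only for illustration: $X_N = e^{g_1 + \dots + g_N}$ with independent Gaussian $g_i$ of mean $\mu$ and variance $\sigma^2$, for which the quenched value is $\mu$ and the annealed one is $\mu + \sigma^2/2$. The sketch also shows the rare-events mechanism: with a fixed number of samples, $N^{-1}\log$ of the sample mean of $X_N$ falls short of $\mu + \sigma^2/2$ at large $N$, because the samples that dominate $\langle X_N \rangle$ are too rare to be drawn. The function name is hypothetical.

```python
import math
import random

def quenched_and_annealed(n, samples, mu=1.0, sigma=1.0, seed=0):
    """Empirical N^-1 <log X_N> and N^-1 log <X_N> for the toy X_N = exp(g_1 + ... + g_N)."""
    rng = random.Random(seed)
    log_x = [sum(rng.gauss(mu, sigma) for _ in range(n)) for _ in range(samples)]
    quenched = sum(log_x) / (n * samples)
    # log of the sample mean of X_N, computed with the log-sum-exp trick to avoid overflow
    top = max(log_x)
    log_mean_x = top + math.log(sum(math.exp(v - top) for v in log_x) / samples)
    return quenched, log_mean_x / n

MU, SIGMA = 1.0, 1.0
print("exact quenched:", MU, "exact annealed:", MU + SIGMA ** 2 / 2)
for n in (1, 10, 100):
    print(n, quenched_and_annealed(n, samples=4000, mu=MU, sigma=SIGMA))
```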

Notice, however, that a non-self-averaging $X_N$ does not necessarily produce a strict inequality: quenched and annealed averages can coincide even when $X_N$ is not self-averaging.
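A minimal example of this situation (a hypothetical choice, not taken from the text above): let $X_N = e^{aN} W$, with $a$ a constant and $W > 0$ an $N$-independent random variable with exponential distribution of unit mean, for which $\langle W \rangle = 1$, $\langle W^2 \rangle = 2$ and $\langle \log W \rangle = -\gamma_E$ (Euler's constant). Both averages then give $a$, while the relative fluctuations of $X_N$ stay of order one:

$$
\begin{aligned}
\frac{1}{N}\langle \log X_N \rangle &= a + \frac{\langle \log W \rangle}{N} = a - \frac{\gamma_E}{N} \xrightarrow{N \to \infty} a , \\
\frac{1}{N}\log \langle X_N \rangle &= a + \frac{\log \langle W \rangle}{N} = a , \\
\frac{\langle X_N^2 \rangle - \langle X_N \rangle^2}{\langle X_N \rangle^2} &= \frac{\langle W^2 \rangle - \langle W \rangle^2}{\langle W \rangle^2} = 1 \quad \text{for every } N .
\end{aligned}
$$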

Problem 1.1: the energy landscape of the REM

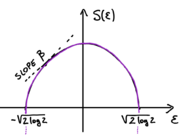

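In this problem we study the random variable $\mathcal{N}(E)$, that is the number of configurations having energy $E_\alpha \in [E, E+dE]$. We show that for large $N$ it scales as $\mathcal{N}(E = N\epsilon) \sim e^{N s(\epsilon)}$, and that the typical value of $N^{-1}\log \mathcal{N}(N\epsilon)$, the quenched entropy $s(\epsilon)$ of the model (see sketch), is given by:

$$ s(\epsilon) = \begin{cases} \log 2 - \epsilon^2 & \text{if } |\epsilon| \leq \sqrt{\log 2} , \\ -\infty & \text{if } |\epsilon| > \sqrt{\log 2} . \end{cases} $$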

The point where the entropy vanishes at negative energy, $\epsilon_{\min} = -\sqrt{\log 2}$, is the energy density of the ground state. The entropy is maximal at $\epsilon = 0$: the largest number of configurations have vanishing energy density.
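A quick consistency check of the entropy $s(\epsilon) = \log 2 - \epsilon^2$, assuming $N$ Ising spins and hence $2^N$ configurations in total: summing $\mathcal{N}(E)$ over all energies must give $2^N$, and by the saddle point argument the integral over $\epsilon$ is dominated by the maximum of $s(\epsilon)$, reached at $\epsilon = 0$:

$$ 2^N = \sum_{E} \mathcal{N}(E) \simeq \int d\epsilon\, e^{N s(\epsilon)} \simeq e^{N \max_\epsilon s(\epsilon)} = e^{N s(0)} = e^{N \log 2} . $$

At the other end, $s(-\sqrt{\log 2}) = \log 2 - \log 2 = 0$ corresponds to a number of configurations that is not exponentially large in $N$, as expected at the bottom of the spectrum.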

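- Averages: the annealed entropy. We begin by computing the annealed entropy $s_a(\epsilon)$, which is defined by the average $\langle \mathcal{N}(E = N\epsilon) \rangle \sim e^{N s_a(\epsilon)}$. Compute this function using the representation $\mathcal{N}(E) = \sum_{\alpha=1}^{2^N} \chi_\alpha(E)$ [with $\chi_\alpha(E) = 1$ if $E_\alpha \in [E, E+dE]$ and $\chi_\alpha(E) = 0$ otherwise]. When does $s_a(\epsilon)$ coincide with $s(\epsilon)$?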

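- Self-averaging. For $|\epsilon| < \sqrt{\log 2}$ the quantity $\mathcal{N}(E)$ is self-averaging: its distribution concentrates around the average value $\langle \mathcal{N}(E) \rangle$ when $N \to \infty$. Show that $\langle \mathcal{N}(E)^2 \rangle - \langle \mathcal{N}(E) \rangle^2 \approx \langle \mathcal{N}(E) \rangle$; using the central limit theorem, show that $\mathcal{N}(E)$ is self-averaging when $|\epsilon| < \sqrt{\log 2}$. This is no longer true in the region where the annealed entropy is negative: why does one expect fluctuations to be relevant in this region?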

- Rare events vs typical values. For $|\epsilon| > \sqrt{\log 2}$ the annealed entropy is negative: the average number of configurations with those energy densities is exponentially small in $N$. This implies that the probability of finding configurations with those energies is exponentially small in $N$: these configurations are rare. Do you have an idea of how to show this, using the expression for $\langle \mathcal{N}(E) \rangle$? What is the typical value of $\mathcal{N}(E)$ in this region? Justify why the point where the entropy vanishes coincides with the ground-state energy of the model.
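A numerical illustration of the three points above, as a sketch assuming $2^N$ configurations with energies of variance $N/2$; the helper names are hypothetical. The exact average number of levels in a window $[E_{\rm lo}, E_{\rm hi})$ follows from the Gaussian cumulative distribution, $2^N \cdot \tfrac{1}{2}\,[\operatorname{erf}(E_{\rm hi}/\sqrt{N}) - \operatorname{erf}(E_{\rm lo}/\sqrt{N})]$. Inside $|\epsilon| < \sqrt{\log 2}$ the count of one sample is close to this average; below $-\sqrt{\log 2}$ the average is much smaller than one and a sample typically contains no level at all.

```python
import math
import random

def expected_count(n, e_lo, e_hi):
    """<N(E)> over the window [e_lo, e_hi): 2^N times the Gaussian probability of the window."""
    # For p(E) with variance N/2 the CDF is (1 + erf(E / sqrt(N))) / 2.
    root_n = math.sqrt(n)
    prob = 0.5 * (math.erf(e_hi / root_n) - math.erf(e_lo / root_n))
    return 2 ** n * prob

def sampled_count(n, e_lo, e_hi, rng):
    """Number of levels of one sampled REM instance falling in [e_lo, e_hi)."""
    sigma = math.sqrt(n / 2.0)
    return sum(1 for _ in range(2 ** n) if e_lo <= rng.gauss(0.0, sigma) < e_hi)

N_SPINS = 16
rng = random.Random(1)
inside = (N_SPINS * -0.30, N_SPINS * -0.25)   # |eps| < sqrt(log 2): count close to its average
outside = (N_SPINS * -1.20, N_SPINS * -1.00)  # eps < -sqrt(log 2): average count << 1
for lo, hi in (inside, outside):
    print(lo / N_SPINS, expected_count(N_SPINS, lo, hi), sampled_count(N_SPINS, lo, hi, rng))
```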

Problem 1.2: the free energy and the freezing transition

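We now compute the equilibrium phase diagram of the model, and in particular the quenched free energy density $f$, which controls the scaling of the typical value of the partition function, $Z^{\rm typ} \sim e^{-\beta N f}$. We show that the free energy equals

$$ f = \begin{cases} -\dfrac{\log 2}{\beta} - \dfrac{\beta}{4} & \text{if } \beta \leq \beta_c , \\ -\sqrt{\log 2} & \text{if } \beta > \beta_c , \end{cases} \qquad \beta_c = 2\sqrt{\log 2} . $$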

At $\beta_c = 2\sqrt{\log 2}$ a transition occurs, often called the freezing transition: in the whole low-temperature phase, the free energy is “frozen” at the value it has at the critical temperature $T_c = 1/\beta_c$.
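The free energy stated above ($f = -\log 2/\beta - \beta/4$ for $\beta \leq \beta_c$ and $f = -\sqrt{\log 2}$ for $\beta > \beta_c$, with $\beta_c = 2\sqrt{\log 2}$) can be transcribed directly. This sketch, with a hypothetical function name, makes it easy to check that the two branches meet at $\beta_c$, where both equal $-\sqrt{\log 2}$, and that $f$ stays constant for $\beta > \beta_c$.

```python
import math

LOG2 = math.log(2)
BETA_C = 2 * math.sqrt(LOG2)  # critical inverse temperature

def free_energy(beta):
    """Quenched free energy density f(beta) of the REM (closed form quoted in the text)."""
    if beta <= BETA_C:
        return -LOG2 / beta - beta / 4
    # Low-temperature (frozen) phase: f sticks to its value at beta_c.
    return -math.sqrt(LOG2)

for beta in (0.5, 1.0, BETA_C, 2.5, 5.0):
    print(f"beta={beta:.3f}  T={1 / beta:.3f}  f={free_energy(beta):.6f}")
```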

- The thermodynamic transition and the freezing. The partition function of the REM reads $Z = \sum_{\alpha=1}^{2^N} e^{-\beta E_\alpha} = \int dE\, \mathcal{N}(E)\, e^{-\beta E}$. Using the behaviour of the typical value of $\mathcal{N}(E)$ determined in Problem 1.1, derive the free energy of the model (hint: perform a saddle point calculation). What is the order of this thermodynamic transition?

- Entropy. What happens to the entropy of the model when the critical temperature is reached, and in the low-temperature phase? What does this imply for the partition function $Z$?

- Fluctuations, and back to average vs typical. Similarly to what we did for the entropy, one can define an annealed free energy $f_a$ from $\langle Z \rangle = e^{-\beta N f_a}$: show that in the whole low-temperature phase it is smaller than the quenched free energy obtained above. Putting all the results together, justify why the average of the partition function in the low-T phase is "dominated by rare events".
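The saddle point calculation can be mimicked numerically: $-\beta f = \max_\epsilon [s(\epsilon) - \beta\epsilon]$. For the quenched free energy $\epsilon$ is restricted to $|\epsilon| \leq \sqrt{\log 2}$ (outside, $s = -\infty$), whereas the annealed free energy, from $\langle Z \rangle = \int dE\, \langle \mathcal{N}(E) \rangle\, e^{-\beta E}$, uses the annealed entropy, which for this Gaussian $p(E)$ is the same parabola $\log 2 - \epsilon^2$ for all $\epsilon$. The grid maximization and the function name are choices of this sketch, not part of the problem statement.

```python
import math

LOG2 = math.log(2)
EPS_GS = -math.sqrt(LOG2)  # ground-state energy density

def minus_beta_f(beta, annealed=False, points=100001):
    """Grid maximization of s(eps) - beta*eps, i.e. the saddle point value of -beta*f.

    Quenched: eps restricted to [EPS_GS, -EPS_GS], where s(eps) = log 2 - eps^2.
    Annealed: the same parabola on the wide window [-5, 5], including where it is
    negative (valid as long as the maximum -beta/2 lies inside, i.e. beta <= 10).
    """
    lo, hi = (-5.0, 5.0) if annealed else (EPS_GS, -EPS_GS)
    step = (hi - lo) / (points - 1)
    return max(LOG2 - e * e - beta * e for e in (lo + k * step for k in range(points)))

for beta in (0.5, 1.0, 2.0, 3.0):
    f_q = -minus_beta_f(beta) / beta
    f_a = -minus_beta_f(beta, annealed=True) / beta
    print(f"beta={beta}  quenched f={f_q:.6f}  annealed f={f_a:.6f}")
```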

Comment: the low-T phase of the REM is a frozen phase, characterized by a temperature-independent free energy and by a typical value of the partition function that is very different from its average value. In fact, the low-T phase is also a glass phase: a phase where a peculiar symmetry, the so-called replica symmetry, is broken. We will come back to these concepts in the next problem sets.
