| <!--'''Comment:''' this analysis of the landscape suggests that in the large <math> N </math> limit, the fluctuations due to the randomness become relevant when one looks at the bottom of their energy landscape, close to the ground state energy. We show below that this intuition is correct, and corresponds to the fact that the partition function <math> Z </math> has an interesting behaviour at low temperature.-->

|

| |

|

| === Problem 1.2: freezing transition & glassiness ===

| |

| In this problem we compute the equilibrium phase diagram of the model, and in particular the quenched free energy density <math>f </math>, which controls the scaling of the typical value of the partition function, <math>Z \sim e^{-N \beta \, f +o(N) } </math>. We show that the free energy equals

| |

| <center><math>

| |

| f =

| |

| \begin{cases}

| |

| &- \left( T \log 2 + \frac{1}{2 T}\right) \quad \text{if} \quad T \geq T_f\\

| |

| & - \sqrt{2 \,\log 2} \quad \text{if} \quad T <T_f

| |

| \end{cases} \quad \quad T_f= \frac{1}{ \sqrt{2 \, \log 2}}.

| |

| </math></center>

| |

| At <math> T_f </math> a transition occurs, often called the freezing transition: in the whole low-temperature phase, the free energy is “frozen” at the value that it has at the critical temperature <math>T= T_f </math>. <br>

| |

| We also characterize the overlap distribution of the model: the overlap between two configurations <math> \vec{\sigma}^\alpha, \vec{\sigma}^\beta </math> is

| |

| <math>q_{\alpha \, \beta}= N^{-1} \sum_{i=1}^N \sigma_i^\alpha \, \sigma_i^\beta</math>, and its distribution is

| |

| <center>

| |

| <math>

| |

| P(q)= \sum_{\alpha, \beta} \frac{e^{-\beta E(\vec{\sigma}^\alpha)}}{Z}\frac{e^{-\beta E(\vec{\sigma}^\beta)}}{Z}\delta(q-q_{\alpha \beta}).

| |

| </math>

| |

| </center>

| |

|

| |

|

|

| |

|

| |

| <ol>

| |

| <li><em> The freezing transition.</em>

| |

| The partition function of the REM reads

| |

| <math>

| |

| Z = \sum_{\alpha=1}^{2^N} e^{-\beta E_\alpha}= \int dE \, \mathcal{N}(E) e^{-\beta E}.

| |

| </math>

| |

| Using the behaviour of the typical value of <math> \mathcal{N} </math> determined in Problem 1.1, derive the free energy of the model (hint: perform a saddle point calculation). What is the order of this thermodynamic transition?

| |

| </li>

| |

| </ol>
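The free energy stated above can be compared with a direct numerical estimate. The following is a minimal sketch, not part of the original problem set: it assumes independent Gaussian energies of zero mean and variance <math>N</math>, and the function names are illustrative. At the small sizes reachable by brute-force enumeration, finite-size corrections are sizeable, especially for <math>T < T_f</math>, so only rough agreement should be expected.

```python
import math
import random

T_F = 1.0 / math.sqrt(2.0 * math.log(2.0))  # freezing temperature T_f


def free_energy_density(T):
    """Quenched free energy density f(T) of the REM, as stated in the text."""
    if T >= T_F:
        return -(T * math.log(2.0) + 1.0 / (2.0 * T))
    return -math.sqrt(2.0 * math.log(2.0))


def sampled_free_energy(N, T, rng):
    """-T log(Z) / N for one sample of 2^N energies (assumed Gaussian, variance N)."""
    beta = 1.0 / T
    energies = [rng.gauss(0.0, math.sqrt(N)) for _ in range(2 ** N)]
    e_min = min(energies)
    # Factor out the largest Boltzmann weight to avoid overflow in exp().
    shifted_sum = sum(math.exp(-beta * (e - e_min)) for e in energies)
    log_z = -beta * e_min + math.log(shifted_sum)
    return -T * log_z / N


if __name__ == "__main__":
    rng = random.Random(0)
    for T in (0.4, T_F, 1.5):
        print(T, free_energy_density(T), sampled_free_energy(14, T, rng))
```

The two branches of `free_energy_density` coincide at <math>T_f</math>, which is a quick consistency check on the formula.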

| |

| <br>

| |

|

| |

| <ol start="2">

| |

| <li><em> Fluctuations, and back to average vs typical.</em> Similarly to what we did for the entropy, one can define an annealed free energy <math> f_{\text{a}} </math> from <math> \overline{Z}=e^{- N \beta f_{\text{a}} + o(N)} </math>: show that in the whole low-temperature phase this is smaller than the quenched free energy obtained above. Putting all the results together, justify why the average of the partition function in the low-T phase is "dominated by rare events".

| |

| </li></ol>
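As a check on the comparison requested above, one can work out the difference explicitly. This is a sketch under the assumption that the energies are Gaussian with variance <math>N</math>, so that <math>\overline{Z}=2^N e^{N\beta^2/2}</math> and <math>f_{\text{a}} = -\left(T \log 2 + \frac{1}{2T}\right)</math> at all temperatures. Using <math>\log 2 = 1/(2 T_f^2)</math>, for <math>T<T_f</math> one finds

<center><math>
f - f_{\text{a}} = -\frac{1}{T_f} + \frac{T}{2 T_f^2} + \frac{1}{2T} = \frac{1}{2T}\left(1 - \frac{T}{T_f}\right)^2 > 0,
</math></center>

so the annealed free energy lies strictly below the quenched one in the whole low-temperature phase, and the two coincide at <math>T = T_f</math>.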

| |

| <br>

| |

|

| |

| <ol start="3">

| |

| <li> <em> Entropy catastrophe.</em> What happens to the entropy of the model when the critical temperature is reached, and in the low temperature phase? What does this imply for the partition function <math> Z</math>?</li>

| |

| </ol>

| |

| <br>

| |

|

| |

|

| |

| <ol start="4"><li><em> Overlap distribution and glassiness.</em>

| |

| Justify why in the REM the overlap typically can take only values zero and one, leading to

| |

| <center>

| |

| <math>

| |

| P(q)= I_2 \, \delta(q-1)+ (1-I_2) \, \delta(q), \quad \quad I_2= \frac{\sum_\alpha z_\alpha^2}{\left(\sum_\alpha z_\alpha\right)^2}, \quad \quad z_\alpha=e^{-\beta E_\alpha}

| |

| </math>

| |

| </center>

| |

| Why can <math> I_2 </math> be interpreted as a probability? Show that

| |

| <center>

| |

| <math>

| |

| \overline{I_2} =

| |

| \begin{cases}

| |

| 0 \quad &\text{if} \quad T>T_f\\

| |

| 1-\frac{T}{T_f} \quad &\text{if} \quad T \leq T_f

| |

| \end{cases}

| |

| </math>

| |

| </center>

| |

| and interpret the result. In particular, why is this consistent with the entropy catastrophe? <br>

| |

| <em> Hint: </em> For <math> T<T_f </math>, use that <math> \overline{I_2}=\frac{T_f}{T} \frac{d}{d\mu}\log \int_{0}^\infty (1-e^{-u -\mu u^2})u^{-\frac{T}{T_f}-1} du\Big|_{\mu=0}</math></li> </ol>
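The quantity <math>I_2</math> is easy to evaluate on sampled energy levels. The sketch below is illustrative only: the function names are not from the original text, and the energies are assumed to be independent Gaussians of variance <math>N</math>. Convergence to <math>1 - T/T_f</math> is slow in <math>N</math>, so small systems give only a qualitative picture.

```python
import math
import random


def participation_ratio(energies, T):
    """I_2 = sum_a z_a^2 / (sum_a z_a)^2 with z_a = exp(-E_a / T)."""
    beta = 1.0 / T
    e_min = min(energies)  # shift so that the largest weight equals 1
    weights = [math.exp(-beta * (e - e_min)) for e in energies]
    total = sum(weights)
    return sum(w * w for w in weights) / (total * total)


def mean_participation_ratio(N, T, samples, rng):
    """Average of I_2 over `samples` independent realisations of the energies."""
    sigma = math.sqrt(N)  # assumed standard deviation of each energy level
    acc = 0.0
    for _ in range(samples):
        energies = [rng.gauss(0.0, sigma) for _ in range(2 ** N)]
        acc += participation_ratio(energies, T)
    return acc / samples


if __name__ == "__main__":
    rng = random.Random(0)
    for T in (0.3, 0.6, 2.0):
        print(T, mean_participation_ratio(12, T, 20, rng))
```

With <math>M</math> equally weighted levels the function returns <math>1/M</math>, and with a single dominant level it returns a value close to one, which matches the reading of <math>I_2</math> as the probability that two independently drawn configurations coincide.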

| |

| <br>

| |

|

| |

| '''Comment:''' the low-T phase of the REM is a frozen phase, characterized by the fact that the free energy is temperature independent, and that the typical value of the partition function is very different from the average value. In fact, the low-T phase is also <em> a glass phase </em> with <math> q_{EA}=1</math>. This is also a phase where a peculiar symmetry, the so-called replica symmetry, is broken. We will come back to these concepts in the next sets of problems.

| |

| <br>

| |

|

| |

|

== Check out: key concepts ==

Goal: derive the equilibrium phase diagram of the simplest spin-glass model, the Random Energy Model (REM).

Techniques: saddle point approximation, Legendre transform, probability theory.

A dictionary for large-N disordered systems

- Exponentially scaling variables. We will consider positive random variables <math>X_N</math> which depend on a parameter <math>N</math> (the number of degrees of freedom: for a system of size <math>L</math> in dimension <math>d</math>, <math>N = L^d</math>) and which have the scaling <math>X_N \sim e^{N Y_N}</math>: this means that the rescaled variable <math>Y_N = N^{-1} \log X_N</math> has a well-defined distribution that remains of <math>O(1)</math> when <math>N \to \infty</math>. The standard examples we have in mind are the partition functions of disordered systems with <math>N</math> degrees of freedom, <math>Z_N = e^{-\beta N f_N}</math>: here <math>X_N = Z_N</math> and <math>Y_N = -\beta f_N</math>, where <math>f_N</math> is the free energy density. Let <math>P_{X_N}(x), P_{Y_N}(y)</math> be the distributions of <math>X_N</math> and <math>Y_N</math>.

- Self-averaging. A random variable <math>Y_N</math> is self-averaging when, in the limit <math>N \to \infty</math>, its distribution concentrates around the average, collapsing to a deterministic value:

<center><math>\lim_{N \to \infty} Y_N = \lim_{N \to \infty} \overline{Y_N} =: Y_\infty, \quad \quad \lim_{N \to \infty} P_{Y_N}(y) = \delta(y - Y_\infty).</math></center>

This happens when its fluctuations are small compared to the average, meaning that [*]

<center><math>\lim_{N \to \infty} \frac{\overline{Y_N^2} - \overline{Y_N}^2}{\overline{Y_N}^2} = 0.</math></center>

When the random variable is not self-averaging, it remains distributed in the limit <math>N \to \infty</math>. When it is self-averaging, sample-to-sample fluctuations are suppressed when <math>N</math> is large. This property holds for the free energy of all the disordered systems we will consider. This is a very important property: it implies that the free energy (and therefore all the thermodynamic observables, which can be obtained by taking derivatives of the free energy) does not fluctuate from sample to sample when <math>N</math> is large, and so the physics of the system does not depend on the particular sample. Notice that while intensive quantities like <math>Y_N</math> (like the free energy density) are self-averaging, quantities scaling exponentially like <math>X_N</math> (like the partition function) are not necessarily so, see below.

- Average and typical. The typical value of a random variable is the value at which its distribution peaks (it is the most probable value). For self-averaging quantities, in the limit <math>N \to \infty</math> average and typical value coincide. In general, it might not be so!

We discuss an example to fix the ideas. Often, quantities like <math>Y_N</math> have a distribution that for large <math>N</math> takes the form <math>P_{Y_N}(y) = e^{-N g(y) + o(N)}</math>, where <math>g(y)</math> is some positive function and <math>o(N)</math> denotes corrections that grow more slowly than <math>N</math>. This is called a large deviation form for the probability distribution, with speed <math>N</math>. This distribution is of <math>O(1)</math> for the value <math>y = y^{\text{typ}}</math> such that <math>g(y^{\text{typ}}) = 0</math>: this value is the typical value of <math>Y_N</math> (asymptotically at large <math>N</math>); all the other values of <math>y</math> are associated to a probability that is exponentially small in <math>N</math>: they are exponentially rare.

Consider now an exponentially scaling quantity like <math>X_N</math>, and let’s fix <math>X_N = e^{N Y_N}</math>. The asymptotic typical values <math>x^{\text{typ}}</math> and <math>y^{\text{typ}}</math> are related by:

<center><math>y^{\text{typ}} = \lim_{N \to \infty} \frac{\log x^{\text{typ}}}{N},</math></center>

so the scaling of <math>x^{\text{typ}}</math> is <math>x^{\text{typ}} \sim e^{N y^{\text{typ}}}</math>. Let us now look at the scaling of the average.

The average of <math>X_N</math> can be computed with the saddle point approximation for large <math>N</math>:

<center><math>\overline{X_N} = \int dy \, P_{Y_N}(y) \, e^{N y} = \int dy \, e^{N[y - g(y)] + o(N)} = e^{N[y^* - g(y^*)] + o(N)},</math></center>

where <math>y^*</math> is the point maximising the shifted function <math>y - g(y)</math>. In this example, <math>y^* \neq y^{\text{typ}}</math>: the asymptotic of the average value of <math>X_N</math> is different from the asymptotic of the typical value. In particular, the average is dominated by rare events, i.e. realisations in which <math>Y_N</math> takes the value <math>y^*</math>, whose probability of occurrence is exponentially small.

- Quenched averages. Let us go back to <math>X_N</math>: how to get <math>y^{\text{typ}}</math> from it? When <math>Y_N</math> is self-averaging,

<center><math>y^{\text{typ}} = \lim_{N \to \infty} \overline{Y_N} = \lim_{N \to \infty} \frac{\overline{\log X_N}}{N},</math></center>

where in the last step we have used that <math>Y_N = N^{-1} \log X_N</math>.

In the language of disordered systems, computing the typical value of <math>X_N</math> through the average of its logarithm corresponds to performing a quenched average: from this average, one extracts the correct asymptotic value of the self-averaging quantity <math>Y_N</math>.

- Annealed averages. The quenched average does not necessarily coincide with the annealed average, defined as:

<center><math>y^{\text{a}} = \lim_{N \to \infty} \frac{\log \overline{X_N}}{N}.</math></center>

In fact, it always holds <math>\overline{\log X_N} \leq \log \overline{X_N}</math> because of the concavity of the logarithm.

When the inequality is strict and quenched and annealed averages are not the same, it means that <math>X_N</math> is not self-averaging, and its average value is exponentially larger than the typical value (because the average is dominated by rare events). In this case, to get the correct limit of the self-averaging quantity <math>Y_N</math> one has to perform the quenched average.[**] This is what happens in the glassy phases discussed in these TDs.

- [*] - See here for a note on the equivalence of these two criteria.

- [**] - Notice that the opposite is not true: one can have situations in which the partition function is not self-averaging, but still the quenched free energy coincides with the annealed one.
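The dictionary above can be made concrete with a simple worked example. This is an illustrative sketch that is not part of the original notes: assume that <math>Y_N</math> is Gaussian with mean <math>y_0</math> and variance <math>\sigma^2/N</math>, where <math>y_0</math> and <math>\sigma</math> are hypothetical parameters. Its distribution then has the large deviation form with

<center><math>
g(y) = \frac{(y-y_0)^2}{2 \sigma^2}, \quad \quad y^{\text{typ}} = y_0, \quad \quad y^* = y_0 + \sigma^2, \quad \quad y^* - g(y^*) = y_0 + \frac{\sigma^2}{2}.
</math></center>

The quenched average gives <math>\lim_{N \to \infty} N^{-1} \overline{\log X_N} = y_0</math>, while the annealed one gives <math>\lim_{N \to \infty} N^{-1} \log \overline{X_N} = y_0 + \sigma^2/2</math>. The average of <math>X_N = e^{N Y_N}</math> is therefore exponentially larger than its typical value, and it is dominated by realisations with <math>Y_N \approx y_0 + \sigma^2</math>, whose probability is of order <math>e^{-N \sigma^2/2}</math>.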

Problems

These problems deal with the Random Energy Model (REM). The REM has been introduced in [1]. In the REM the system can take <math>2^N</math> configurations <math>\vec{\sigma}^\alpha = (\sigma_1^\alpha, \cdots, \sigma_N^\alpha)</math> with <math>\sigma_i^\alpha = \pm 1</math>. To each configuration <math>\alpha = 1, \cdots, 2^N</math> is assigned a random energy <math>E_\alpha</math>. The random energies are independent, taken from a Gaussian distribution

<center><math>p(E) = \frac{1}{\sqrt{2 \pi N}} e^{-\frac{E^2}{2N}}.</math></center>

Problem 1.1: the energy landscape of the REM

Entropy of the Random Energy Model

In this problem we study the random variable  , that is the number of configurations having energy

, that is the number of configurations having energy ![{\displaystyle E_{\alpha }\in [E,E+dE]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/743293b01383f5322ecc6b6bb9268ca083af88f4) . For large

. For large  this variable scales as

this variable scales as  . Let

. Let  . We show that the typical value of

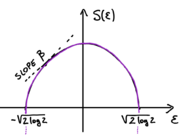

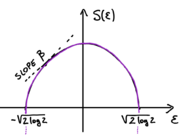

. We show that the typical value of  , the quenched entropy density of the model (see sketch), is given by:

, the quenched entropy density of the model (see sketch), is given by:

The point where the entropy vanishes,  , is the energy density of the ground state. The entropy is maximal at

, is the energy density of the ground state. The entropy is maximal at  : the highest number of configurations have vanishing energy density.

: the highest number of configurations have vanishing energy density.

- Averages: the annealed entropy. We begin by computing the annealed entropy <math>S^{\text{a}}(\epsilon)</math>, which is defined by the average <math>\overline{\mathcal{N}(E)} = e^{N S^{\text{a}}(E/N) + o(N)}</math>. Compute this function using the representation <math>\mathcal{N}(E) dE = \sum_{\alpha=1}^{2^N} \chi_\alpha(E) \, dE</math> [with <math>\chi_\alpha(E) = 1</math> if <math>E_\alpha \in [E, E+dE]</math> and <math>\chi_\alpha(E) = 0</math> otherwise].

- Self-averaging. For <math>|\epsilon| < \sqrt{2 \, \log 2}</math> the quantity <math>\mathcal{N}</math> is self-averaging: its distribution concentrates around the average value <math>\overline{\mathcal{N}}</math> when <math>N \to \infty</math>. Show this by computing the second moment <math>\overline{\mathcal{N}^2}</math>. Deduce that <math>S(\epsilon) = S^{\text{a}}(\epsilon)</math> when <math>|\epsilon| \leq \sqrt{2 \, \log 2}</math>. This property of being self-averaging is no longer true in the region where the annealed entropy is negative: why does one expect fluctuations to be relevant in this region?

- Rare events. For <math>|\epsilon| > \sqrt{2 \, \log 2}</math> the annealed entropy is negative: the average number of configurations with those energy densities is exponentially small in <math>N</math>. This implies that the probability to get configurations with those energies is exponentially small in <math>N</math>: these configurations are rare. Do you have an idea of how to show this, using the expression for <math>\overline{\mathcal{N}}</math>? What is the typical value of <math>\mathcal{N}</math> in this region? Putting everything together, derive the form of the typical value of the entropy density. Why does the point where the entropy vanishes coincide with the ground state energy of the model?
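The three steps above can be explored numerically by counting sampled energy levels in a window of energy densities. The following is a minimal sketch under stated assumptions: the energies are independent Gaussians of variance <math>N</math>, the entropy expressions are the ones quoted in this problem, and all function names are illustrative.

```python
import math
import random


def annealed_entropy(eps):
    """Annealed entropy density log 2 - eps^2 / 2 (it can be negative)."""
    return math.log(2.0) - 0.5 * eps * eps


def typical_entropy(eps):
    """Typical entropy density: the annealed one where non-negative, -inf otherwise."""
    s = annealed_entropy(eps)
    return s if s >= 0.0 else float("-inf")


def count_levels(energies, N, eps, d_eps):
    """Number of levels with energy density in [eps, eps + d_eps)."""
    return sum(1 for e in energies if eps <= e / N < eps + d_eps)


if __name__ == "__main__":
    N = 14
    rng = random.Random(0)
    energies = [rng.gauss(0.0, math.sqrt(N)) for _ in range(2 ** N)]
    for eps in (-1.5, -1.0, -0.5, 0.0):
        n = count_levels(energies, N, eps, 0.1)
        print(eps, n, typical_entropy(eps))
```

Windows far beyond <math>|\epsilon| = \sqrt{2 \log 2}</math> are empty in almost every sample, which illustrates why the typical number of levels there is zero even though its average is positive.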

Check out: key concepts

Self-averaging, average value vs typical value, large deviations, rare events, saddle point approximation, freezing transition, overlap distribution.

To know more

- Derrida. Random-energy model: limit of a family of disordered models [1]

The terms “quenched” and “annealed” come from metallurgy and refer to the procedure in which you cool a very hot piece of metal: a system is quenched if it is cooled very rapidly (instantaneously changing its environment by putting it into cold water, for instance) and has to adjust to this new fixed environment; annealed if it is cooled slowly, kept in (quasi)equilibrium with its changing environment at all times. Think now of how you compute the free energy, and of disorder as the environment. In the quenched protocol, you compute the average over configurations of the system keeping the disorder (environment) fixed, so the configurations have to adjust to the given disorder. Then you take the log and only afterwards average over the randomness (not even needed, at large <math>N</math>, if the free energy is self-averaging). In the annealed protocol instead, the disorder (environment) and the configurations are treated on the same footing and adjust to each other: you average over both simultaneously.
